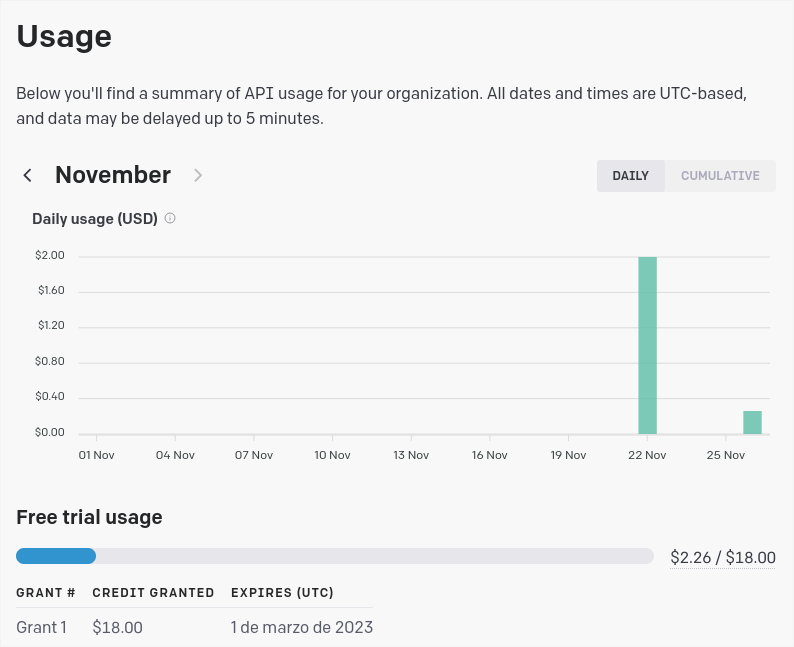

AI image generation has a high computational cost. Don't be fooled by the speed of the Dall·E 2 API that we saw in this post; these services are usually paid for a reason and, apart from the online services that we also saw, running AI on an average computer is not so simple.

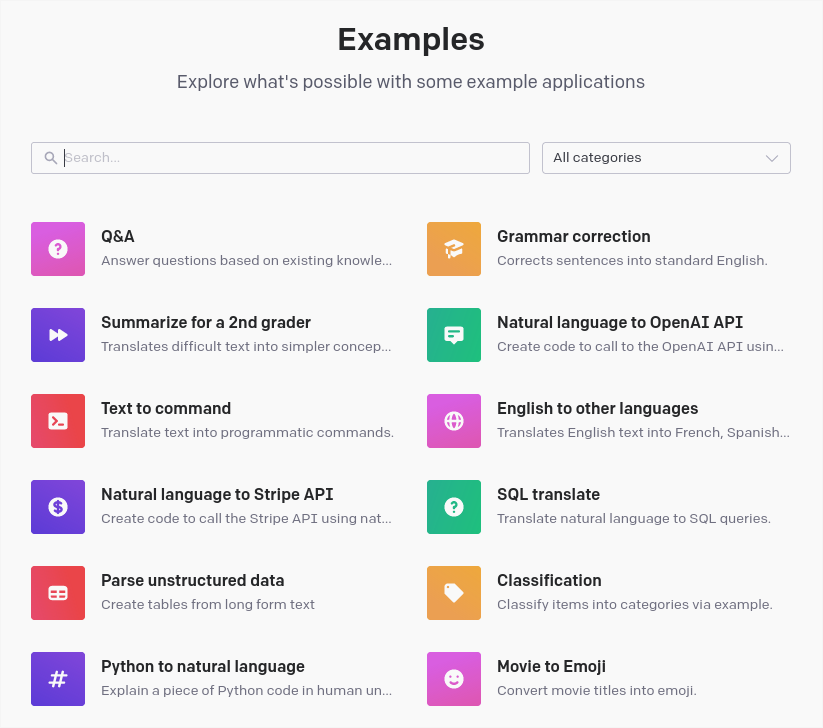

After trying, in vain, some open-source alternatives like Pixray or Dalle-Flow, I finally bring you the simplest of them: dalle-playground. This is an early version of Dalle, so you won't get the best results.

Even so, I will soon bring an alternative to Dall·E (Stable Diffusion from stability.ai), which also has a community-optimized version for low-resource PCs.

Requirements

Hardware

To give you an idea, Pixray recommends a minimum of 16GB of VRAM and Dalle-Flow 21GB. VRAM, or video RAM, is the fast-access memory on your graphics card (don't confuse it with the usual system RAM).

A standard laptop like mine has an Nvidia GeForce GTX1050Ti with 3GB of dedicated VRAM, plus 8GB of RAM on board.

With this minimum requirement, and some patience, you can run dalle-playground locally on your PC, although it also requires an internet connection to check for updated Python modules and AI checkpoints.
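If you are not sure how much VRAM your own card has, a short script can read it for you. This is only a sketch: it assumes an Nvidia card with the `nvidia-smi` tool installed by the driver, and the function names `parse_vram_mib` and `query_vram_mib` are made up for this example.

```python
import shutil
import subprocess


def parse_vram_mib(smi_output: str) -> list[int]:
    """Turn nvidia-smi CSV output (one MiB value per line) into integers."""
    values = []
    for line in smi_output.splitlines():
        text = line.strip()
        # Skip blank lines and anything that is not a plain number.
        if text.isdigit():
            values.append(int(text))
    return values


def query_vram_mib() -> list[int]:
    """Return total VRAM in MiB per Nvidia GPU, or [] if it cannot be read."""
    # Assumption: nvidia-smi is on the PATH (it ships with the Nvidia driver).
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_vram_mib(result.stdout)


if __name__ == "__main__":
    for index, mib in enumerate(query_vram_mib()):
        print(f"GPU {index}: {mib / 1024:.1f} GiB of VRAM")
```

If nothing is printed, either `nvidia-smi` was not found or no Nvidia GPU was detected.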

If you have one or several more powerful graphics cards, I would recommend trying Pixray, as it installs relatively easily and is well documented and widely used.

https://github.com/pixray/pixray#usage

Software

Software requirements aren't trivial either. You'll need Python and Node.js. I will show the main steps for Linux, which is more flexible for installing packages of this kind, but they are equally valid for Windows or Mac if you are comfortable in a terminal or with Docker.

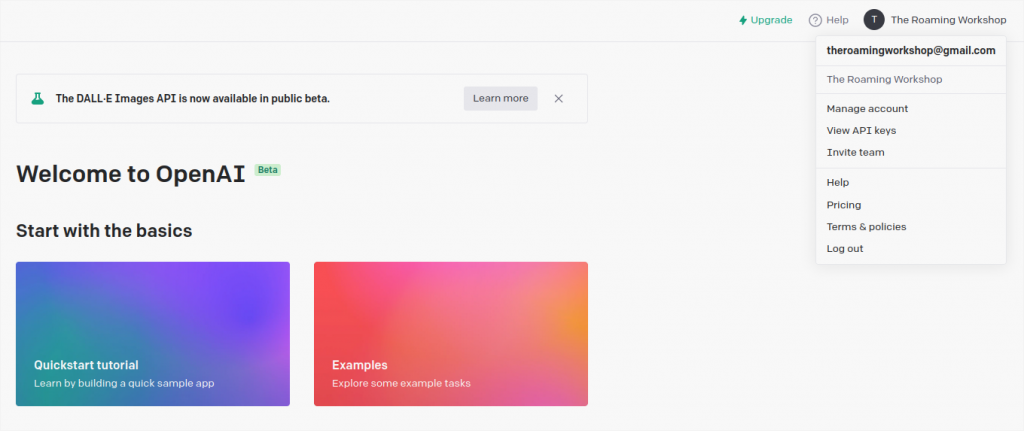

Download dalle-playground

I found this repository by chance, just before it was updated for Stable Diffusion V2 (back in November 2022) and I was smart enough to clone it.

Download the whole repository from my GitHub:

https://github.com/TheRoam/dalle-playground-DalleMINI-localLinux

Or optionally download the original repository with Stable Diffusion V2, but this requires much more VRAM:

https://github.com/saharmor/dalle-playground/

If you use git you can clone it directly from the terminal:

git clone https://github.com/TheRoam/dalle-playground-DalleMINI-localLinux

I renamed the folder locally to dalle-playground.

Install python3 and required modules

The whole algorithm runs in Python in a backend. The main repository only mentions the use of python3, so I assume that previous versions won't work. Check your Python version with:

>> python3 -V

Python 3.10.6

Or install it from its official source (it's currently on version 3.11, so check which is the latest available for your system):

https://www.python.org/downloads/

Or from your Linux repo:

sudo apt-get install python3.10

You'll also need the venv module to virtualize dalle-playground's working environment so it won't alter the whole python installation (the following is for Linux as it's included in the Windows installer):

sudo apt-get install python3.10-venv

In the backend folder, create a Python virtual environment, which I named dalleP:

cd dalle-playground/backend

python3 -m venv dalleP

Now, activate this virtual environment (you'll see that the name appears at the start of the terminal line):

source dalleP/bin/activate

(dalleP) abc@123: ~dalle-playground/backend$

Install the remaining Python modules required by dalle-playground, which are listed in the file dalle-playground/backend/requirements.txt:

pip3 install -r requirements.txt

Apart from this, you'll need PyTorch, if not installed yet:

pip3 install torch

Install npm

Node.js will run a local web server which will act as an app. Install it from the official source:

https://nodejs.org/en/download/

Or from your Linux repo:

sudo apt-get install npm

Now move to the frontend folder dalle-playground/interface and install the modules needed by Node:

cd dalle-playground/interface

npm install

Launch the backend

With everything installed, let's launch the servers, starting with the backend.

First activate the Python virtual environment in the folder dalle-playground/backend (if you just installed it, it should be activated already):

cd dalle-playground/backend

source dalleP/bin/activate

Launch the backend app:

python3 app.py --port 8080 --model_version mini

The backend will take a few minutes to start (from 2 to 5). Wait for a message like the following and note the IP addresses that appear at the end:

--> DALL-E Server is up and running!

--> Model selected - DALL-E ModelSize.MINI

* Serving Flask app 'app' (lazy loading)

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: off

INFO:werkzeug:WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:8080

* Running on http://192.168.1.XX:8080

Launch frontend

We'll now launch the Node.js local web server, opening a new terminal:

cd dalle-playground/interface

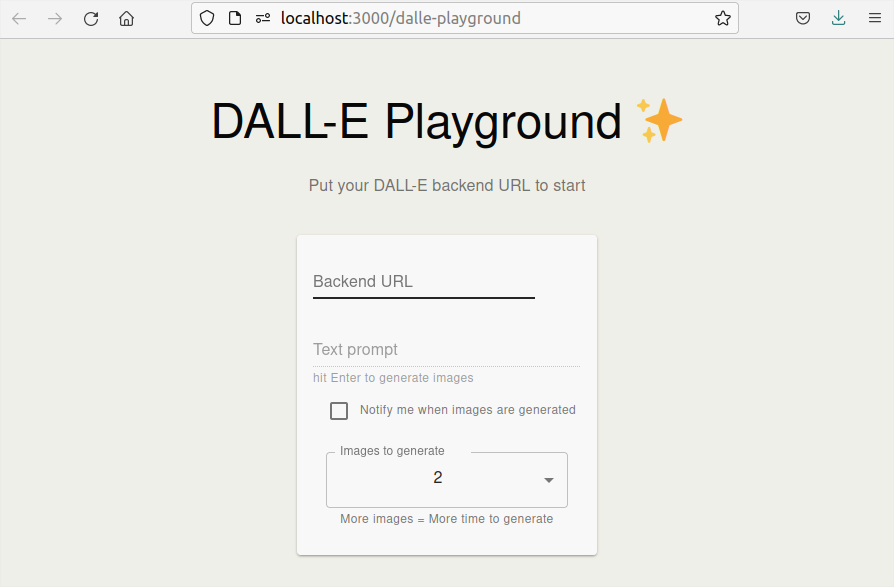

npm start

When the process finishes, it will launch a web browser and show the graphical interface of the app.
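If the interface opens but does not seem to reach the backend, it can help to confirm that something is actually listening on the backend port. The following is a minimal sketch, assuming the backend was started on port 8080 as in the command above. The function name `backend_is_up` is made up for this example, and the check only tells you that the TCP port accepts connections, nothing more.

```python
import socket


def backend_is_up(host: str = "127.0.0.1", port: int = 8080, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError if the port is closed or unreachable.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("backend reachable:", backend_is_up())
```

To check from another device on your network, pass the second address printed by the backend as `host` instead of 127.0.0.1.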

Automatic launcher

On Linux you can use my script launch.sh, which starts the backend and frontend automatically following the steps above. Just sit back and wait for it to load.

launch.sh

backend.sh

frontend.sh
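For readers who prefer Python to shell scripts, the same manual steps can be sketched as below. This is not the content of launch.sh, just an illustration of the steps described in this post. It assumes the folder layout used above (backend, interface, a virtual environment called dalleP), and the names `build_commands` and `launch` are made up for this example. Instead of activating the virtual environment, it calls the Python interpreter inside it directly, which uses the same installed modules.

```python
import subprocess
from pathlib import Path


def build_commands(repo: Path, venv: str = "dalleP", port: int = 8080):
    """Return (working_directory, argv) pairs for the backend and the frontend."""
    backend_dir = repo / "backend"
    # Assumption: the venv was created inside backend/, as in the steps above.
    venv_python = backend_dir / venv / "bin" / "python3"
    backend = (
        backend_dir,
        [str(venv_python), "app.py", "--port", str(port), "--model_version", "mini"],
    )
    frontend = (repo / "interface", ["npm", "start"])
    return [backend, frontend]


def launch(repo: Path) -> list[subprocess.Popen]:
    """Start both servers as child processes and return their handles."""
    return [subprocess.Popen(argv, cwd=cwd) for cwd, argv in build_commands(repo)]


if __name__ == "__main__":
    # Hypothetical location; adjust to wherever you cloned the repository.
    repo = Path.home() / "dalle-playground"
    if repo.is_dir():
        for process in launch(repo):
            process.wait()
    else:
        print(f"Repository not found at {repo}")
```

Note that this sketch starts both processes at once and does not wait for the backend to finish loading before the frontend opens.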

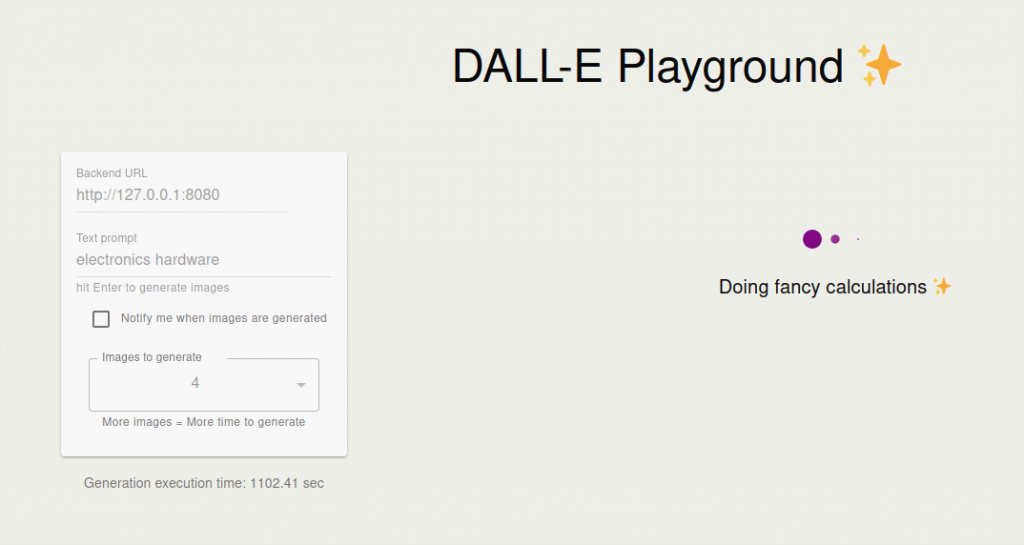

App dalle-playground

In the first field, type the IP address for the backend server that we saw earlier. If you're accessing from the same PC, you can use the first one:

http://127.0.0.1:8080

But you can also access it from any other device on your local network using the second one:

http://192.168.1.XX:8080

Now enter the description of the image to be generated in the second field, and choose the number of images to show (more images will take longer).

Press [enter] and wait for the image to generate (about 5 minutes per image).

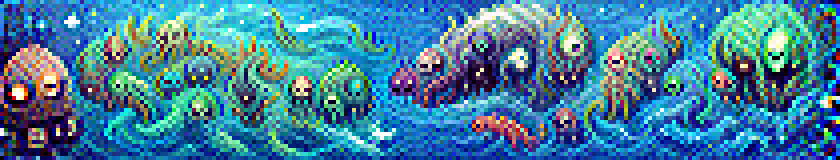

And there you have your first locally generated AI image. I will include a small gallery of results below. In the next post I will show how to obtain better results using Stable Diffusion, also with less than 4GB of VRAM.

You know I await your questions and comments on 🐦 Twitter!

Gallery: Dalle-playground

Note: original images at 256x256 pixels, upscaled using Upscayl.